The focus of quality control strategies should be on patient outcomes, not technology

Laboratories use quality control (QC) procedures to assure the reliability of the test results they produce and report. According to ISO 15189, the International Organization for Standardization (ISO) standard on particular requirements for quality and competence in medical laboratories, “The laboratory shall design quality control procedures that verify the attainment of the intended quality of results.”[1] That is, laboratory QC procedures should assure that the test results reported by the lab are fit for use in providing good patient care. Unfortunately, there is often a temptation to focus on the instruments being used in the lab, and the “big picture” view of patient outcomes can be forgotten when QC programs and strategies are designed.

Laboratory QC design

The primary tool laboratories use to perform routine QC is the periodic testing of QC specimens, which are manufactured to provide a stable analyte concentration with a long shelf life. A laboratory establishes the target concentration and analytical imprecision for each analyte in the control specimens as assayed on its instruments. Thereafter, the laboratory periodically tests the control specimens and applies QC rules to the control results to decide whether the instrument is operating as intended or whether an out-of-control error condition has occurred.
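To make this routine concrete, here is a minimal Python sketch of a single QC event. It assumes the laboratory has already established a target mean and SD for each control level, and it uses a simple 1-3s rule (reject if any control result lies more than 3 SD from its target) purely as an example; the rule, the `ControlTarget` structure, the function names, and the numbers are illustrative assumptions, not a recommended QC design.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ControlTarget:
    """Target mean and SD established for one control level (hypothetical structure)."""
    mean: float
    sd: float


def z_score(result: float, target: ControlTarget) -> float:
    """Distance of a control result from its target mean, in SD units."""
    return (result - target.mean) / target.sd


def qc_event_accepts(results: Sequence[float],
                     targets: Sequence[ControlTarget],
                     limit_sd: float = 3.0) -> bool:
    """Apply an assumed 1-3s rule: accept the QC event only if every
    control result lies within +/- limit_sd SDs of its target mean."""
    if len(results) != len(targets):
        raise ValueError("need exactly one target per control result")
    return all(abs(z_score(r, t)) <= limit_sd for r, t in zip(results, targets))


# Two hypothetical control levels for one analyte.
levels = [ControlTarget(mean=100.0, sd=2.0), ControlTarget(mean=250.0, sd=5.0)]

print(qc_event_accepts([101.5, 246.0], levels))  # True  (z = 0.75 and -0.8)
print(qc_event_accepts([107.0, 250.0], levels))  # False (z = 3.5 on level 1)
```

A green diamond in the figures that follow corresponds to `True` here, a red diamond to `False`.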

Traditionally, laboratories choose how many control specimens to test and which QC rules to apply with two goals in mind: a low probability of wrongly deciding that the instrument is out of control when it is, in fact, operating as intended (the false rejection rate), and a high probability of correctly deciding that the instrument is out of control when an out-of-control error condition has indeed occurred (the error detection rate).
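Both rates can be computed for a given rule. The sketch below assumes control results are independent and normally distributed about their targets, models an out-of-control error as a sustained shift of `shift_sd` SDs, and uses a generic 1-ks rule (reject if any of `n_controls` results falls beyond ±k SD); these are simplifying assumptions chosen for illustration.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_reject(n_controls: int, k: float, shift_sd: float = 0.0) -> float:
    """Probability that a 1-ks rule rejects a QC event.

    shift_sd = 0 gives the false rejection rate; shift_sd > 0 gives the
    error detection rate for a systematic shift of that many SDs.
    """
    # Probability that a single control result stays inside +/- k SD.
    p_inside = normal_cdf(k - shift_sd) - normal_cdf(-k - shift_sd)
    # The event is rejected unless every control result stays inside.
    return 1.0 - p_inside ** n_controls


false_rejection = prob_reject(n_controls=2, k=3.0)
error_detection = prob_reject(n_controls=2, k=3.0, shift_sd=3.0)
print(f"false rejection rate: {false_rejection:.4f}")  # 0.0054
print(f"error detection rate: {error_detection:.4f}")  # 0.7500
```

Lowering k or testing more control results raises the error detection rate, but it also raises the false rejection rate; balancing those two numbers is the traditional, instrument-focused design problem.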

Clearly, the in-control or out-of-control status of a laboratory’s instruments influences the reliability (quality) of the patient results reported by the lab. Yet laboratories tend to design QC strategies that focus only on controlling the state of the instrument, rather than on controlling the risk of producing and reporting erroneous patient results that could compromise patient care.

A focus on the patient

One approach that focuses more directly on the quality of patient results (rather than the state of the instrument) is to design QC strategies that control the expected number of erroneous patient results reported because of an undetected out-of-control error condition in the lab.[2] What do we mean by “erroneous” patient results? The quality of a patient result depends on the difference between the patient specimen’s true concentration and the value reported by the laboratory. We define an erroneous patient result as one in which this difference exceeds a specified total allowable error, TEa. If the error in a patient’s result exceeds TEa, we assume it places the patient at increased risk of a medically inappropriate action.

How does a laboratory decide what the TEa specification for an analyte should be? This is not a simple question to answer, but several lists of TEa specifications covering hundreds of analytes have been published and are available from a variety of sources.

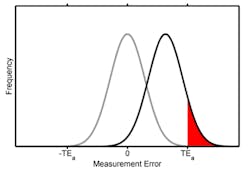

Once a TEa has been specified for an analyte, the probability of producing patient results with errors that exceed TEa can be computed for any possible out-of-control state, as demonstrated in Figure 1. The gray curve represents the frequency distribution of measurement errors for an instrument operating as intended. The distribution is centered on zero, and its width reflects the inherent analytical imprecision of the instrument. The black curve represents the frequency distribution of measurement errors after a hypothetical out-of-control error condition has occurred; this condition causes the instrument to produce results that are too high. The area under the out-of-control distribution that lies above TEa or below -TEa (shaded in red) is the probability of producing an erroneous patient result. In this case, 10% of the area under the curve is shaded red: while the instrument was operating in this out-of-control state, we would expect 10% of the patient results it produced to be erroneous.
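The shaded-area calculation can be sketched as follows, assuming measurement error is normally distributed with SD `sd` (the analytical imprecision) and that the out-of-control condition adds a constant shift `shift` without changing the imprecision. Values are in the analyte's units; the TEa, SD, and shift in the example are hypothetical and are not the parameters behind Figure 1.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_erroneous(tea: float, sd: float, shift: float = 0.0) -> float:
    """Probability that one patient result has an error beyond +/- tea,
    assuming the error is Normal(mean=shift, sd=sd)."""
    above = 1.0 - normal_cdf((tea - shift) / sd)  # area to the right of +TEa
    below = normal_cdf((-tea - shift) / sd)       # area to the left of -TEa
    return above + below


# Hypothetical analyte: TEa = 6 units, analytical SD = 2 units.
print(f"in control:       {prob_erroneous(6.0, 2.0):.4f}")             # 0.0027
print(f"shifted +4 units: {prob_erroneous(6.0, 2.0, shift=4.0):.4f}")  # 0.1587
```

Even an instrument operating as intended has a small chance of producing an erroneous result; the out-of-control shift is what makes that chance large.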

The expected number of erroneous patient results reported while an undetected out-of-control error condition exists depends not only on the likelihood of producing erroneous results in the presence of the error condition, but also on how many patient results are produced before the error condition is detected and corrected. This is illustrated in Figure 2. In this example, each vertical line represents a patient specimen tested on the instrument, and each diamond represents a QC event in which QC specimens are tested and QC rules are applied. A green diamond means the QC results are accepted; a red diamond means they are rejected. At a point between the second and third QC events, an out-of-control error condition occurs, causing a sustained shift in the testing process (like the one shown in Figure 1). Given the magnitude of this particular out-of-control condition and the power of the QC rules, the error condition is not detected until the third QC event after it occurred. Each red asterisk denotes an erroneous patient result produced while the out-of-control error condition existed.
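The process in Figure 2 can be simulated directly. This Monte Carlo sketch assumes a fixed number `m` of patient specimens between QC events, an error condition that starts at a uniformly random point within one QC interval, a constant per-event detection probability `p_detect`, and a constant per-result probability `p_err` of an erroneous result (the red-area probability from Figure 1). It counts erroneous results produced up to the QC event that detects the error and compares the average with the closed-form expectation implied by the same assumptions. All names and numbers are illustrative.

```python
import random


def simulate_once(m: int, p_detect: float, p_err: float, rng: random.Random) -> int:
    """Count erroneous results (red asterisks) produced before one error condition is detected."""
    if m < 1 or not 0.0 < p_detect <= 1.0:
        raise ValueError("need m >= 1 and 0 < p_detect <= 1")
    # The error starts somewhere inside a QC interval, so 1..m specimens
    # are tested before the next QC event.
    n_tested = rng.randint(1, m)
    # Each QC event misses the error with probability 1 - p_detect;
    # every miss lets another full interval of m specimens be tested.
    while rng.random() >= p_detect:
        n_tested += m
    # Each specimen tested under the error condition is erroneous with probability p_err.
    return sum(1 for _ in range(n_tested) if rng.random() < p_err)


def expected_erroneous(m: int, p_detect: float, p_err: float) -> float:
    """Closed-form expectation under the same assumptions:
    E[specimens tested] = (m + 1) / 2 + m * (1 - p_detect) / p_detect."""
    return p_err * ((m + 1) / 2 + m * (1.0 - p_detect) / p_detect)


def mean_simulated(m: int, p_detect: float, p_err: float,
                   trials: int = 50_000, seed: int = 1) -> float:
    """Average of simulate_once over many simulated error conditions."""
    rng = random.Random(seed)
    return sum(simulate_once(m, p_detect, p_err, rng) for _ in range(trials)) / trials


# Hypothetical scenario: 20 specimens per QC interval, 50% detection per
# QC event, 10% of results erroneous while the error persists.
print(f"closed form: {expected_erroneous(20, 0.5, 0.1):.2f}")  # 3.05
print(f"simulated:   {mean_simulated(20, 0.5, 0.1):.2f}")      # close to 3.05
```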

Notice some of the important relationships between QC events, the number of patients tested between QC events, and the number of erroneous patient results illustrated in Figure 2:

Not all the results produced during an error condition are unreliable (the probability of producing an unreliable result during an error condition increases with the magnitude of the error).

QC events do not always detect an error condition on the first try (the probability that a QC event detects an error condition, its error detection rate, depends on the QC rule, the number of control samples tested, and the magnitude of the error).

If the error condition in the example were smaller, we would expect proportionally fewer of the results tested during the undetected error condition to be unreliable (red asterisks in Figure 2), but more QC events would be needed to detect it. Conversely, if the error condition were larger, we would expect proportionally more of those results to be unreliable, and fewer QC events would be needed to detect it. If the error condition were large enough, all of the patient results tested after its occurrence would be unreliable, and it would almost certainly be detected at the first QC event after it occurred (the third diamond in Figure 2).
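These trade-offs can be checked numerically by combining the two probabilities. This sketch assumes normally distributed errors, a hypothetical 1-3s rule with two control results per QC event, a TEa equal to 4 analytical SDs, and 100 patient specimens between QC events, with shifts expressed in SD units. As the shift grows, the per-result probability of an erroneous result rises while the expected number of QC events needed for detection falls.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def p_erroneous(shift_sd: float, tea_sd: float) -> float:
    """Per-result probability that |error| > TEa (all quantities in SD units)."""
    return 1.0 - normal_cdf(tea_sd - shift_sd) + normal_cdf(-tea_sd - shift_sd)


def p_detect(shift_sd: float, k: float, n_controls: int) -> float:
    """Per-QC-event detection probability of a 1-ks rule with n_controls results."""
    p_inside = normal_cdf(k - shift_sd) - normal_cdf(-k - shift_sd)
    return 1.0 - p_inside ** n_controls


TEA_SD, K, N_CONTROLS, M = 4.0, 3.0, 2, 100  # hypothetical design choices

rows = []
for shift in (1.0, 2.0, 3.0, 4.0, 6.0):
    pe = p_erroneous(shift, TEA_SD)
    p_det = p_detect(shift, K, N_CONTROLS)
    qc_events = 1.0 / p_det  # expected QC events until detection (geometric)
    # Expected erroneous results produced, error starting uniformly within an interval.
    n_err = pe * ((M + 1) / 2 + M * (1.0 - p_det) / p_det)
    rows.append((shift, pe, qc_events, n_err))
    print(f"shift {shift:.0f} SD: P(erroneous)={pe:.4f}  "
          f"QC events={qc_events:.1f}  erroneous results={n_err:.1f}")
```

Other choices of TEa, rule, or interval length may shift these numbers considerably, so they are illustrative only.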

In summary

QC that focuses on the instrument is concerned with the likelihood that a QC rule will trigger an alert after an error condition has occurred (the probability of a red diamond in Figure 2). Instrument-focused QC strategies are designed to control the number of QC events required to detect an error condition.

QC that focuses on the patient, on the other hand, is concerned with how many erroneous patient results are produced while an undetected error condition exists (the number of red asterisks in Figure 2). Patient-focused QC strategies should be designed to control the number of erroneous patient results produced before the error condition is detected.
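The difference between the two viewpoints shows up clearly when two QC schedules use the same rule but test different numbers of patient specimens between QC events. This sketch assumes a hypothetical per-event detection probability of 0.75, a per-result error probability of 0.16, an error condition that starts at a uniformly random point within an interval of `m` specimens, and independent QC events. The instrument-focused metric (expected QC events to detection) is identical for both schedules, while the patient-focused metric (expected erroneous results produced before detection) is roughly four times larger for the longer interval.

```python
def expected_qc_events_to_detect(p_detect: float) -> float:
    """Instrument-focused metric: mean of a geometric number of QC events."""
    return 1.0 / p_detect


def expected_erroneous_results(m: int, p_detect: float, p_err: float) -> float:
    """Patient-focused metric: expected erroneous results produced before detection,
    assuming the error starts uniformly within an interval of m specimens."""
    specimens_tested = (m + 1) / 2 + m * (1.0 - p_detect) / p_detect
    return p_err * specimens_tested


P_DETECT = 0.75  # hypothetical per-QC-event detection probability
P_ERR = 0.16     # hypothetical per-result probability of an erroneous result

for m in (50, 200):  # specimens tested between QC events
    print(f"m={m}: QC events to detect={expected_qc_events_to_detect(P_DETECT):.2f}, "
          f"erroneous results={expected_erroneous_results(m, P_DETECT, P_ERR):.2f}")
# m=50: QC events to detect=1.33, erroneous results=6.75
# m=200: QC events to detect=1.33, erroneous results=26.75
```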

References

1. International Organization for Standardization. Medical laboratories - particular requirements for quality and competence. ISO 15189:2012. Geneva: International Organization for Standardization (ISO).

2. Parvin CA. Assessing the impact of the frequency of quality control testing on the quality of reported patient results. Clin Chem. 2008;54:2049-54. doi:10.1373/clinchem.2008.113639.

About the Author

John Yundt-Pacheco MSCS

is the Senior Principal Scientist at the Quality Systems Division of Bio-Rad Laboratories. He leads the Informatics Discovery Group, doing research in quality control and patient risk issues. He has had the opportunity to work with laboratories around the world — developing real time, inter-laboratory quality control systems, proficiency testing systems, risk management and other quality management systems.

Curtis Parvin, PhD

is retired from Bio-Rad, where he was Manager of Advanced Statistical Research. Prior to joining Bio-Rad, Parvin was Director of Informatics and Statistics on the faculty of Washington University School of Medicine.