Microsatellites and VNTR typing in clinical settings

MAY LEARNING OBJECTIVES

Upon completion of this article, the reader will be able to:

1. Recall the overall biology of microsatellites and the events that take place that lead to random diversity of genetic markers.

2. Discuss the technique involved in VNTR loci evaluation.

3. Describe the clinical applications in which VNTR typing is used.

4. Discuss the benefits of VNTR typing.

One application of molecular technology is what is colloquially referred to as “DNA fingerprinting” or “DNA profiling.” The fingerprint analogy is an apt one, as the method generates a reproducible biometric pattern which is unique to an individual yet provides little or no phenotypic information about the source (sex being the one common exception, as detailed further below). The method also provides data which can be used to calculate relatedness between samples. Beyond its common appearance in fictionalized (and real) criminal forensics settings, the capability to link specific trace fragments of human tissue to individuals and to objectively determine relatedness between samples is of real use in anthropological studies and, more to our focus, in mundane clinical settings. The most common method for DNA profiling is known as Variable Number Tandem Repeat (VNTR) typing and will be our present focus.

Terminology

First, some terminology. Within the chromosomes comprising the nuclear DNA of most organisms, including humans, there are often intergenic noncoding regions which consist of multiple tandem (head to tail) repeats of a single short (most commonly 2, 3, 4, or 5 base) element. Examples would be (ATT)n or (GTTAC)n, where the parenthetic set of nucleotides repeats in a row n times. These elements are referred to as microsatellites. The name derives from the dark ages of molecular biology, when preparative isolation of DNA included buoyant density ultracentrifugation on CsCl gradients. Due to their uniform composition, sheared fragments of microsatellite DNA form small, distinct bands slightly separated from non-repetitive DNA material. When the number of element repeats (n) exceeds about 50, similar but slightly larger unique bands are formed, and these longer elements of similar structure are called minisatellites. To keep things unnecessarily complicated, microsatellites are also sometimes called Short Tandem Repeats (STRs) or Simple Sequence Repeats (SSRs), although this latter term is more frequently applied in plant genetics. Finally, both microsatellites (by any name) and minisatellites are collectively referred to as VNTRs. In practice the terms STR typing, microsatellite typing, and VNTR typing are often used interchangeably, a looseness of terminology which can sometimes lead to confusion where people look for differences in meaning that aren’t intended.

Biology

If there are enough of them to be seen as distinct bands on CsCl gradients, it should come as no surprise that there are many microsatellites in the human genome. Overall, they comprise about three percent of the total human nuclear genome, dispersed more or less uniformly across all chromosomes. Those based on odd-length elements (three or five nucleotides) are about half as common as those with even-length elements (two, four, or six nucleotides), and for reasons unknown (if there even is a reason) triplet SSRs are most common in association with coding regions. It’s unclear if microsatellites have a consistent useful biological function; in most cases they appear either to do nothing or to be pathogenic. Pathogenic examples related to trinucleotide repeats have their own collective name: trinucleotide repeat disorders. One of the best-known examples of these is Huntington’s disease. In this case, the repeat element CAG occurs within an exonic (protein coding) portion of the HTT gene, with each repeat coding for an additional glutamine residue in series in the mature Huntingtin protein. At n < 26, protein behavior is normal and there is little risk to offspring, but as n increases (and with it the number of glutamine residues present in a row in the protein) there is a progressive loss of proper gene function, evidenced first by increased risk of Huntington’s disease in offspring, then by risk of Huntington’s disease in the proband, and then by increasing severity of disease and decreasing age of symptom onset. At approximately n > 40, full penetrance (worst case presentation) is observed, along with a 50 percent risk of disease transmission to offspring.

For our current consideration, what’s of key importance here is that the repeat number (n) for a single microsatellite locus can sometimes change between generations. One mechanism by which this occurs is known as polymerase slippage. Essentially, this happens during DNA replication if polymerase activity pauses and the nascent strand briefly detaches from the template before reannealing; it may anneal in register with regard to the repeat element either upstream or downstream of where it detached. When polymerase activity resumes, the progeny strand has either gained or lost copies of the repeat, respectively. It is observed that gain of repeats occurs much more frequently than loss. Considering this mechanism, it further stands to reason that the longer a microsatellite is, the greater the chances for a slippage event to occur during replication. Overall there is thus a trend for microsatellites to get longer over time, with the degree of instability increasing as they lengthen, in effect creating a positive feedback process. On evolutionary timescales this does not become a runaway process, and it must be constrained by loss of organism fitness as more and more chromosomal regions become endless short repeats.

Another mechanism which can lead to changes in mini and microsatellite element repeat number is unequal crossing over. Readers are reminded that during meiosis, breakage and rejoining of homologous chromosome pairs allows for the recombination of alleles. These breakages and joins occur along homologous sequence elements on the two chromosomes, but as in the case of polymerase slippage it is possible for a shift in register by some multiple of the repeat element size to occur during strand alignment. The resulting recombined chromosomes will have one which has gained repeats while the other has lost an equal number. In common with polymerase slippage, this type of instability is increasingly likely to occur as the length of the region—that is, the number of repeats n—increases.

It’s important both that there are mechanisms for change in repeat copy number (this creates differentiable markers over time) and that such changes are individually quite rare, occurring on an evolutionary timescale (meaning that we can expect that in almost all cases, allele sizes remain fixed between generations). Overall, we are presented with a large and dispersed set of VNTRs across the genome, and we should expect them to behave as fixed in size when going from parent to progeny. This randomness of dispersion across the genome, relatively high population diversity per locus, and low frequency of further variation between generations underlie their utility as genetic markers.

Technique

In practice, VNTR loci for evaluation are selected based on having highly conserved flanking sequences; a large number of repeat number sizes seen across the population of interest (in other words, high allelic diversity); and good amplification behavior (reliable amplification of true loci across a wide range of input concentrations, with few or no spurious amplification products). For ease of use and convenience in assay performance it’s further desirable to multiplex loci into single amplification reactions, so loci with dissimilar (that is, non-overlapping) product sizes, similar amplification kinetics, and common reaction requirements are selected to group together. For each individual VNTR locus, PCR primers are selected which meet these criteria, and one PCR primer of the pair for each locus is fluorescently labelled. The end detection method supports five separable dye channels and, with one channel dedicated to a size standard, a total of four target dyes are available. The requirement above for amplicons of dissimilar size is therefore not absolute: when amplicons of two loci may be of overlapping size, they can be differentially dye labeled. By a combination of differentiable expected product sizes and use of different dyes where sizes might overlap, it is possible to multiplex 15-20 different loci in a single reaction. (Technically, challenges in making so many competing reactions amplify with near equal efficiency may make it simpler to limit individual PCR reactions to four to six loci per multiplex set, and then combine products of several such reactions for the detection step.)
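As a rough illustration of the size-and-dye rule just described, the sketch below flags any two loci that share a dye and whose expected amplicon size ranges overlap. The locus names, dye colors, and size ranges are all hypothetical, chosen only to show the check; a real panel design would also weigh amplification kinetics and reaction conditions, which are not modelled here.

```python
from itertools import combinations

# Hypothetical panel: locus name -> (dye channel, smallest amplicon, largest amplicon) in bp.
PANEL = {
    "LOCUS_A": ("blue", 100, 140),
    "LOCUS_B": ("blue", 160, 210),
    "LOCUS_C": ("green", 110, 150),  # overlaps LOCUS_A in size, but uses a different dye
    "LOCUS_D": ("green", 140, 190),  # overlaps LOCUS_C in size and shares its dye
}


def find_conflicts(panel):
    """Return sorted pairs of loci that share a dye and have overlapping size ranges."""
    conflicts = []
    for (name1, (dye1, lo1, hi1)), (name2, (dye2, lo2, hi2)) in combinations(panel.items(), 2):
        same_dye = dye1 == dye2
        sizes_overlap = lo1 <= hi2 and lo2 <= hi1
        if same_dye and sizes_overlap:
            conflicts.append(tuple(sorted((name1, name2))))
    return sorted(conflicts)


conflicts = find_conflicts(PANEL)
print(conflicts)
```

In this made-up panel only the LOCUS_C and LOCUS_D pair is flagged; moving either one to a different dye, or redesigning primers to shift its size range, would resolve the conflict.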

Considering for a moment just one of our loci, if we PCR amplify it in a diploid organism such as a human, we expect products from two alleles, one on each chromosome of the homologous pair. From each allele we expect to get either no amplicon (in the event the locus is deleted), or a product of a size which is determined by the number of repeat elements present in the microsatellite. If we take a triplet repeat as an example and assume some space for flanking sequences where our PCR primers are located, we might across a population sample at this locus see amplicon sizes of 112 to 130 nucleotides in length at 3 nt intervals. Each of these discrete sizes will occur at some frequency in the population. If we extend this to the diploid situation, we expect to see no amplicon (a homozygous deletion, with the locus missing on both chromosomes); one size product (where the individual is homozygous, both alleles having the same repeat number); or two products (where the individual is heterozygous for repeat number). In any case you can score zero, one, or two products, and the products if present will be at known, predetermined sizes.
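The arithmetic in the example above can be made concrete with a short sketch. It assumes, purely for illustration, 82 nt of combined flanking and primer sequence and a repeat count running from 10 to 16; those two figures are not from any real locus and were picked only because they reproduce the 112 to 130 nt range at 3 nt intervals.

```python
def expected_sizes(flank_nt, repeat_unit_nt, min_repeats, max_repeats):
    """Amplicon size for each possible repeat count: flank + unit length * n."""
    return [flank_nt + repeat_unit_nt * n for n in range(min_repeats, max_repeats + 1)]


def call_locus(observed_sizes):
    """Score a single locus from the distinct product sizes seen in one sample."""
    distinct = sorted(set(observed_sizes))
    if len(distinct) == 0:
        return "null (homozygous deletion)"
    if len(distinct) == 1:
        return "homozygous"
    if len(distinct) == 2:
        return "heterozygous"
    return "more than two products (not expected from a single diploid source)"


# Hypothetical triplet-repeat locus: 82 nt of flanking sequence, 10 to 16 repeats.
sizes = expected_sizes(flank_nt=82, repeat_unit_nt=3, min_repeats=10, max_repeats=16)
print(sizes)
print(call_locus([112, 121]))
print(call_locus([118]))
print(call_locus([]))
```

With seven possible allele sizes at this one hypothetical locus there are 7 homozygous and 21 heterozygous combinations, or 28 distinguishable genotypes before any other locus is considered.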

A common nuance to the methodology is the addition of a final longer PCR extension step which allows for uniform monoadenylation of all products (the DNA polymerase commonly used in this application has a tendency to add a single “A” to 3’ ends of amplicons). If this is not allowed to go to completion, the end product is some mix of blunt and monoadenylated products, giving rise to two product peaks one nucleotide apart. The longer final extension encourages all products to be monoadenylated and thus generate a single uniform peak. It’s a nice thought, but one which in reality is also influenced by adjacent sequences, meaning individual VNTR loci tend to have distinctive “personalities” as evidenced by characteristic ratios of these two peaks, or sometimes even a descending series of “stutter” products, which are evidence of polymerase slippage at the locus during PCR.

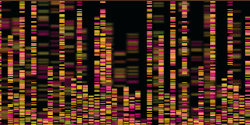

From an instrumentation perspective, we can use capillary electrophoresis (conveniently available in the form of trusty old Sanger sequencing machines) to reliably separate these products by size and color, with sizing accuracy assisted by inclusion on a dedicated dye channel of a size standard in each reaction.

Now if we extend this to a number of such loci and can identify the product(s) from each by some combination of dye label and/or size, we can generate a vast number of possible combinations of product size and color patterns—each reproducibly generated by one individual’s template DNA. With enough such loci combined, not only are the results reproducible per individual, but also unique (or approaching unique, to some astronomically high probability) to that individual. With the loci commonly used for human VNTR typing, only some 12-15 markers are needed to reach acceptable levels of “uniqueness.”
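To get a feel for how quickly “uniqueness” accumulates, here is a minimal sketch of a random match probability calculation. It assumes Hardy-Weinberg proportions (p² for a homozygote, 2pq for a heterozygote) and statistically independent loci, which is a common simplification rather than anything stated above, and every allele frequency in it is invented for illustration.

```python
from math import prod


def genotype_frequency(allele_freqs, allele_1, allele_2):
    """Expected genotype frequency under Hardy-Weinberg: p*p or 2*p*q."""
    p = allele_freqs[allele_1]
    q = allele_freqs[allele_2]
    return p * p if allele_1 == allele_2 else 2 * p * q


def random_match_probability(freqs_by_locus, profile):
    """Multiply per-locus genotype frequencies, treating loci as independent."""
    return prod(
        genotype_frequency(freqs_by_locus[locus], a1, a2)
        for locus, (a1, a2) in profile.items()
    )


# Hypothetical 13-locus panel in which the sample is heterozygous at every locus
# for two alleles that each have a population frequency of 0.1.
loci = [f"LOCUS_{i:02d}" for i in range(1, 14)]
freqs_by_locus = {locus: {112: 0.1, 115: 0.1} for locus in loci}
profile = {locus: (112, 115) for locus in loci}

rmp = random_match_probability(freqs_by_locus, profile)
print(f"{rmp:.3e}")
```

Under these made-up numbers each locus contributes a factor of 0.02, so 13 loci give a match probability on the order of 10⁻²², and even a profile missing two loci would still sit near 10⁻¹⁹.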

Interpretation

At least two stages of interpretation of the data occur. The first is in directly examining the capillary electrophoresis data for expected markers, and “binning” the data. As we now understand, each product can occur in multiple pre-set sizes based on the repeat element length; we’ve also an inkling that not all loci are well behaved and may have split peak (incomplete monoadenylation) or stutter issues. While this may sound complex, in reality it becomes fairly straightforward to interpret results and call each locus as either null (homozygous deleted), heterozygous with two size values, or homozygous with a single size value. (Some among you may note there’s another possibility, hemizygous deletion. This will also show as a single peak for the one present allele size, and while the peak area may be less than would be expected for a homozygote of that size, we generally can’t detect or call this with certainty and could erroneously call this homozygous. Loci are chosen such that deletions are very rare and so this condition isn’t expected to be observed.)

Note that because we’re looking for peaks at known, expected sizes (and these differ by at least two and more commonly three, four, or five nucleotides), minor variations in migration due to, for example, differing ionic strength between samples are not significant. Sizes as reported by the instrument are “binned” to the closest matching size; an apparent 161.7 bp product when there are known 161 and 166 alleles can be comfortably called as 161.
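The binning step amounts to snapping each measured size to the nearest known allele. The sketch below does that and, as an added assumption not described above, refuses to call a peak that lies more than a chosen tolerance (here 1.0 bp, an arbitrary value) from every known allele.

```python
def bin_size(measured_bp, known_alleles, tolerance_bp=1.0):
    """Snap a measured size to the nearest known allele, or return None if too far off.

    tolerance_bp is an illustrative cutoff, not a validated laboratory setting.
    """
    nearest = min(known_alleles, key=lambda allele: abs(allele - measured_bp))
    if abs(nearest - measured_bp) > tolerance_bp:
        return None
    return nearest


print(bin_size(161.7, [161, 166]))  # the example from the text: called as 161
print(bin_size(163.4, [161, 166]))  # too far from either allele: left uncalled
```

A peak that fails to bin is worth a second look by eye, since it may be an artifact or a rare allele not in the expected set.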

By carrying out this scoring/binning on all marker sets, an ordered series of values is generated. While the exact number depends on the set of markers chosen, as an example one common commercial iteration of this method uses 15 classical VNTR loci (yielding 30 values) and one additional locus which, while not strictly a classical VNTR, can be treated as one and yields two more values (which indicate whether the source material was male or female), for a total of 32 values. This set of values is the sample “fingerprint.”
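One way to picture the resulting “fingerprint” is as a flat, ordered tuple with two values per locus. The sketch below uses a hypothetical three-locus panel plus a sex marker, with made-up locus names and the sex marker simplified to the labels "X" and "Y"; a 16-locus panel handled the same way would yield the 32 values mentioned above.

```python
def build_fingerprint(calls, locus_order):
    """Flatten per-locus allele calls into one ordered tuple, two values per locus."""
    values = []
    for locus in locus_order:
        alleles = sorted(calls.get(locus, []))
        if len(alleles) == 0:
            pair = (None, None)  # null call, or a locus that gave no result
        elif len(alleles) == 1:
            pair = (alleles[0], alleles[0])  # homozygous: same value twice
        elif len(alleles) == 2:
            pair = (alleles[0], alleles[1])  # heterozygous
        else:
            raise ValueError(f"{locus}: more than two alleles called")
        values.extend(pair)
    return tuple(values)


# Hypothetical panel order and binned calls for one sample.
LOCUS_ORDER = ["LOCUS_A", "LOCUS_B", "LOCUS_C", "SEX"]
calls = {"LOCUS_A": [118, 112], "LOCUS_B": [161], "SEX": ["X", "Y"]}

fingerprint = build_fingerprint(calls, LOCUS_ORDER)
print(fingerprint)
```

Keeping the locus order fixed and sorting alleles within each locus means two runs of the same sample produce identical tuples, which makes comparison a simple equality check.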

Applications

Where do we find VNTR typing used in clinical (non-forensic) settings today? It can be applied any time we wish to link two samples, with two of the most common settings being:

- Paternity testing. Barring any de novo changes in repeat copy number, a child should inherit one half of their VNTR markers from each parent. The appearance of even a single locus in the offspring bearing a size not represented in the putative parents means either that a de novo size change has occurred (possible, but rare) or, much more likely, that one (or both) of the parents isn’t as thought. Given the total number of loci examined and the population diversity at each locus, it would be vanishingly rare for someone to not show at least some differences from an unrelated putative father. Of course, all of this can be applied maternally as well; however, paternity testing is more commonly encountered than maternity testing.

- Sample tracking, particularly in anatomic pathology for tissue blocks. In some labs, this is employed as a means to ensure that formalin-fixed, paraffin-embedded (FFPE) tissue samples can unequivocally be traced to the patient of origin.
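The inheritance rule behind paternity testing can be sketched as a per-locus check: the child's two alleles must be explainable as one from the mother and one from the putative father. The profiles below are hypothetical, and the sketch deliberately ignores the rare de novo size change and any null alleles, so a flagged locus is a prompt for review rather than a verdict.

```python
def inconsistent_loci(child, mother, father):
    """List loci where the child's alleles cannot be one maternal plus one paternal."""
    flagged = []
    for locus, (a, b) in child.items():
        maternal = set(mother[locus])
        paternal = set(father[locus])
        fits = (a in maternal and b in paternal) or (b in maternal and a in paternal)
        if not fits:
            flagged.append(locus)
    return flagged


# Hypothetical three-locus profiles; each value is the pair of binned allele sizes.
child = {"LOCUS_A": (112, 118), "LOCUS_B": (161, 166), "LOCUS_C": (140, 148)}
mother = {"LOCUS_A": (112, 121), "LOCUS_B": (161, 161), "LOCUS_C": (140, 144)}
father_1 = {"LOCUS_A": (118, 124), "LOCUS_B": (166, 171), "LOCUS_C": (140, 152)}
father_2 = {"LOCUS_A": (118, 124), "LOCUS_B": (166, 171), "LOCUS_C": (148, 152)}

print(inconsistent_loci(child, mother, father_1))
print(inconsistent_loci(child, mother, father_2))
```

Here the first candidate father cannot account for the child's 148 allele at LOCUS_C, while the second is consistent at all three loci. The same comparison with identical profiles expected on both sides serves the sample-tracking case.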

Benefits

Finally, let’s ask ourselves why this technology—developed some 20 years ago, in a field where new technologies emerge and frequently replace older methods—remains relevant and in front line use today. The answer is that a number of factors contribute to the method’s inherent robustness, accuracy and reliability at low cost and simplicity. Some of these attributes include:

- Low sensitivity to contamination. Because the readout method (capillary electrophoresis) provides both peak size in base pairs (the measured attribute) and a peak height or peak area, it’s generally possible to clearly differentiate signals of a majority component from trace contaminant signals. In effect, the PCR reactions at the target loci from one template are directly competing with the homologous loci from a contaminating template. As amplification efficiencies are essentially equal, the relative peak heights from majority and contaminating template(s) more or less linearly reflect relative starting concentrations. In other words, if a contaminant is 10 percent the prevalence of majority template, its VNTR peaks will be 10 percent the height of the majority peaks. This allows both for ready assessment of whether a sample contains a single template or multiple templates and, in most cases where low-level contamination exists, for readout of the majority signal. In the event that a sample contains multiple genomes at near equal levels, while assignment of markers to the correct source may not be possible, it’s at least immediately apparent that multiple genomes are present (when multiple markers appear triploid or tetraploid, it’s a mixed sample). The likelihood of an undetected sample contamination or mixture is very low.

- Very high sensitivity. Because classical PCR is employed to amplify each of the marker loci, sensitivities down to single copy are possible. Note however that as sensitivity demands are increased, the resistance to confusion by contamination decreases as contaminant concentrations are more likely to approach those of desired target.

- Good performance on poor quality DNA. Because the individual amplicons are quite short (usually, 80-250 bp or so), DNA which has been damaged and fragmented is often still quite suitable for this method; the shorter the amplicons are, the lower the likelihood that a DNA break or chemical crosslink has occurred between primer sites at any given locus.

- Utility of partial results. In the case of extremely low template levels and/or very serious DNA damage, it’s possible that not all loci may provide results. While this reduces the identification power of a result, it’s by a measurable amount. Loss of just one or two markers from a sample—a partial DNA fingerprint—can still provide informative (even astronomical) levels of certainty in a relatedness result.

- The data sets generated are small and easily manipulated. In the example given above, for a 16 locus test, a complete result is just a string of 32 numbers; a tiny amount of data by computational standards, allowing for easy storage of immense numbers of fingerprints in a single database and very fast search and comparison algorithms. In terms of data manipulation to measure degree of relatedness between samples, the mathematics is well established, requires little computational capacity, easily handles large data sets, and can be visualized in straightforward ways like phylogenetic trees.

- Low infrastructural and per-assay cost requirements. The actual instrumentation requirements for this method are low; almost any MDx lab has the required equipment on hand. (Depending on particular application of the method the majority costs are more likely to be related to chain of custody tracking than actual assay performance.)

- Rapidity and ease of automation. Ease of automation is self-explanatory; in terms of assay performance speed, it’s a process of DNA extraction, PCR, capillary electrophoresis, and interpretation. In theory it’s possible to carry out all steps in a single work day with limited hands-on time.
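The contamination point in the first bullet above lends itself to a small sketch: use peak heights to read out the majority profile and flag loci showing more than two real peaks. The noise floor and minor-peak fraction used here are arbitrary illustrative values, the peak data are invented, and stutter and split peaks are not modelled at all.

```python
def assess_sample(peaks_by_locus, noise_floor=50, minor_fraction=0.3):
    """Return (majority profile, loci with more than two peaks above the noise floor).

    peaks_by_locus maps locus -> list of (binned size in bp, peak height).
    Both thresholds are hypothetical, not validated laboratory settings.
    """
    major_profile = {}
    loci_with_extra_peaks = []
    for locus, peaks in peaks_by_locus.items():
        real = [(size, height) for size, height in peaks if height >= noise_floor]
        if len(real) > 2:
            loci_with_extra_peaks.append(locus)
        tallest = max((height for _, height in real), default=0)
        # Keep only peaks reasonably close in height to the tallest one.
        major_profile[locus] = sorted(
            size for size, height in real if height >= minor_fraction * tallest
        )
    return major_profile, loci_with_extra_peaks


# Hypothetical sample with a contaminant at roughly 5 to 10 percent of the main template.
sample = {
    "LOCUS_A": [(112, 2000), (121, 1900), (118, 200)],
    "LOCUS_B": [(161, 4000), (166, 210), (171, 190)],
    "LOCUS_C": [(140, 1800), (148, 2100), (144, 30)],
}

major, mixed_loci = assess_sample(sample)
print(major)
print(mixed_loci)
```

In this example the majority profile is still readable at every locus, while two loci showing a third peak signal that a second template is present.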

That’s not even an exhaustive list of all the good performance attributes of the method, but already enough to understand why the method hasn’t been supplanted and frankly, isn’t likely to be any time soon. It’s a cheap, reliable, tried-and-true way to link DNA specimens to individuals (or to each other) and to assess inter-relatedness between samples—useful in all the ways noted above.

About the Author

John Brunstein, PhD, is a member of the MLO Editorial Advisory Board. He serves as President and Chief Science Officer for British Columbia-based PathoID, Inc., which provides consulting for development and validation of molecular assays.