Digital management of quality control: a critical tool for the modern lab

Quality control (QC) remains one of the most important tasks of the medical laboratory, because it ensures the reliability and accuracy of reported patient results. Whenever results already sent to physicians need to be corrected, or prolonged quality troubleshooting is necessary within the laboratory, the consequences can affect patient safety, laboratory credibility, operating costs, turnaround times, and regulatory or accreditation compliance. Recently, the industry buzz related to QC has focused on the concept of risk management, most notably CLSI’s EP23 guideline and CMS’s Individualized Quality Control Plan (IQCP). When applied properly, risk management can help minimize the risk of reporting incorrect patient test results.

Minimizing the risk of reporting incorrect patient test results starts with good laboratory protocols, including proper calibration and maintenance of laboratory instruments. At the same time, modern laboratories are under pressure from many directions: reducing turnaround times, managing staffing shortages, assimilating new laboratory automation options to handle burgeoning test volumes, and coping with leaner budgets. Under these pressures, laboratories now rely on automated quality control software to provide fast and reliable evaluation of results.

One of the most important attributes of a real-time quality control reporting system is the ability to capture and process QC data automatically from laboratory information systems (LIS) or middleware systems. Laboratories cannot afford to lose time waiting for the green light to begin testing patient samples. In today’s environment, it is no longer practical to rely on paper Levey-Jennings charts on which laboratorians manually plot QC results, or to enter results manually in long spreadsheets. Laboratories need QC data management with connectivity solutions that integrate seamlessly into their workflow and deliver results in real time. The best solutions include bi-directional connectivity that automatically directs instruments to stop reporting results after a QC failure, even before a laboratorian has seen the result. This capability is usually implemented as part of auto-verification.

Digital management of quality control data provides opportunities and benefits for laboratories, starting with the design of the QC process. Laboratory staff can use new integrated algorithms to select the most appropriate QC rules for detecting clinically significant errors, minimizing the risk of reporting incorrect patient results. With current data management solutions, labs no longer have to apply one easy-to-remember but poorly suited rule to all tests, such as rejecting a run whenever a single QC result falls more than 2 standard deviations from the mean (1-2s). Modern laboratories now base their QC design on the bias, imprecision, and selected total allowable error for each analyte. Estimating the bias for each analyte requires participation in an interlaboratory program or proficiency testing (PT) program. Some software can transmit the QC or PT results directly to the corresponding interlaboratory program, so that the QC design is completed as part of an integrated process.
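One common way to combine bias, imprecision, and total allowable error (TEa) into a single number is the sigma metric, (TEa - |bias|) / CV, with all three expressed in percent. The sketch below is illustrative only: the function names are hypothetical, and the thresholds used to suggest a rule set are an assumption loosely modeled on published sigma-based rule selection schemes, not the logic of any particular software package.

```python
def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Return the sigma metric: (TEa - |bias|) / CV, all inputs in percent."""
    if cv_pct <= 0:
        raise ValueError("CV must be positive")
    return (tea_pct - abs(bias_pct)) / cv_pct


def suggest_rules(sigma: float) -> str:
    """Map a sigma metric to a candidate QC rule set.

    The cut-offs below are illustrative assumptions; a laboratory would
    set them according to its own QC design policy.
    """
    if sigma >= 6:
        return "1-3s"
    if sigma >= 5:
        return "1-3s/2-2s/R-4s"
    if sigma >= 4:
        return "1-3s/2-2s/R-4s/4-1s"
    return "full multirule with more QC measurements per run"


# Hypothetical analyte: TEa 10%, bias 1% (from peer comparison), CV 1.5%
sigma = sigma_metric(tea_pct=10.0, bias_pct=1.0, cv_pct=1.5)
print(sigma, suggest_rules(sigma))
```

A high-sigma method can be monitored with a single wide rule and few false rejections, while a low-sigma method needs more rules and more control measurements to catch clinically significant errors.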

Once the QC process has been designed, it is important to review and manage trends at regular intervals to judge the effectiveness of the process. QC is not a static process but rather a dynamic, evolving system. A new reagent lot, a new calibrator lot, a new QC lot, instrument maintenance, and many other factors can all influence and modify the behavior of testing systems over time. The use of multiple instruments or modules in the laboratory environment is also a contributing factor. Detecting changes and estimating their influence on patient results has become an important part of the process.

Large shifts should not be the top concern in a laboratory today, since they can be detected relatively quickly and corrected before they do any harm. The top concern should be the small to moderate shifts that affect only a few results each day. Because these errors can go unnoticed for a long time, they may ultimately compromise patient safety. To detect them, laboratorians can use automated tools that are often integrated in QC data management software packages. Data analysis grids can help compare the differences between instruments and indicate the size of errors. Multiple Levey-Jennings charts displayed next to each other or overlaid in one chart can help identify trends or shifts across instruments. These charts can be created by QC level or across QC levels to determine whether the test system shows systematic errors or just random errors.
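As a minimal sketch of how software might flag a small shift that no single result would reveal, the example below converts QC results for one control level to z-scores and applies a few widely used multirules (1-3s, 2-2s, 4-1s, 10x) across consecutive runs. The function names and the sample data are hypothetical, and the rule set is a simplified assumption rather than a complete multirule implementation.

```python
from typing import List


def z_scores(results: List[float], mean: float, sd: float) -> List[float]:
    """Express each QC result as a number of SDs from the established mean."""
    return [(x - mean) / sd for x in results]


def has_run(z: List[float], length: int, threshold: float) -> bool:
    """True if `length` consecutive z-scores all lie beyond `threshold`
    on the same side of the mean."""
    high = low = 0
    for v in z:
        high = high + 1 if v > threshold else 0
        low = low + 1 if v < -threshold else 0
        if high >= length or low >= length:
            return True
    return False


def check_rules(z: List[float]) -> List[str]:
    """Return the names of the rules violated by a series of z-scores."""
    flags = []
    if any(abs(v) > 3 for v in z):
        flags.append("1-3s")   # one result beyond 3 SD
    if has_run(z, 2, 2.0):
        flags.append("2-2s")   # two consecutive beyond 2 SD, same side
    if has_run(z, 4, 1.0):
        flags.append("4-1s")   # four consecutive beyond 1 SD, same side
    if has_run(z, 10, 0.0):
        flags.append("10x")    # ten consecutive on one side of the mean
    return flags


# Hypothetical control with mean 100 and SD 2; the last four runs sit
# a little over 1 SD high, a shift that no single-result rule catches.
results = [100.5, 99.0, 102.5, 103.0, 102.4, 103.1]
print(check_rules(z_scores(results, mean=100.0, sd=2.0)))
```

In this sample series every result stays within 2 SD, so a single-result rule stays silent, while the run-based 4-1s rule picks up the sustained shift.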

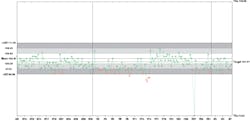

In addition to these statistical tools, laboratorians can also use quality specifications and analytical goals to evaluate whether shifts or trends are clinically significant. Regulatory or scientifically based specifications, such as CLIA criteria or biological variation data, can reveal test criteria that are not set appropriately and that therefore cause unnecessary repeat QC testing, instrument calibration, and troubleshooting. These quality specifications can also be included on the Levey-Jennings charts and are powerful visual tools to evaluate test performance (Figure 1).


To monitor operational performance and quality over time, dashboards can provide information that is clear and easy to act upon for the most critical issues and failures. Laboratory staff can review QC data and add corresponding actions and comments to the QC results for audit trail documentation.

These new integrated technologies bring a risk of their own: if connectivity fails, QC results may never reach the QC data management program. Advanced QC tools can guard against this by scanning the program at fixed intervals to verify that the expected QC results are present. If results are missing, alerts are displayed in the program and email notifications are sent to laboratory staff. All aspects of these features are tracked in an audit trail that provides complete traceability. This is an important step for regulatory and accreditation purposes. Laboratories can easily generate reports that can be shared with an auditor or filed for future inspections.
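The check for missing QC results can be sketched as a simple comparison between the tests a laboratory expects to run and the timestamp of the latest QC result received for each. Everything in this example is a hypothetical illustration: the function name, the data layout, and the 8-hour window are assumptions, and a real system would read these values from its own database and schedule.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def find_missing_qc(
    expected_tests: List[str],
    last_received: Dict[str, datetime],
    max_age: timedelta,
    now: datetime,
) -> List[str]:
    """Return the expected tests with no QC result inside the allowed window.

    A test counts as missing if no result was ever received or if the
    latest result is older than `max_age`.
    """
    missing = []
    for test in expected_tests:
        received_at = last_received.get(test)
        if received_at is None or now - received_at > max_age:
            missing.append(test)
    return missing


# Hypothetical scan at noon with an assumed 8-hour QC window
now = datetime(2024, 1, 1, 12, 0)
last_received = {
    "glucose": datetime(2024, 1, 1, 11, 0),  # 1 hour old: present
    "sodium": datetime(2024, 1, 1, 2, 0),    # 10 hours old: overdue
}
print(find_missing_qc(["glucose", "sodium", "potassium"],
                      last_received, timedelta(hours=8), now))
```

Running such a scan on a fixed schedule, and writing each outcome to the audit trail, would give the alerting and traceability behavior described above.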

Digital programs are able to more quickly integrate new QC concepts. Several new developments for QC are gaining popularity and will change workflow and design. There are significant advancements being made in the areas of risk management, QC frequency determination, measurement uncertainty, patient moving averages, and much more. Integrating these concepts into a digital program can help laboratories more rapidly adopt new QC practices and tailor processes to their current infrastructure. Future digital solutions may include modules for method evaluation or other less frequent statistical evaluations such as linearity assessments, contamination or carry-over studies, detection limits, and so on. These data management tools are all part of a digital QC management solution.

The integration of digital management of QC into the modern laboratory is critical. Not only does it allow for real-time decisions, but additional features such as QC design, risk management, data analysis, audit trails, reports, graphical representations, and interlaboratory participation are all part of a complete data management system.

About the Author

Nico Vandepoele, BSc, serves as Scientific and Professional Affairs Manager for Bio-Rad Laboratories Quality Systems Division. He works to promote an understanding of laboratory regulations and best practices as they pertain to QC and EQA/PT programs.