Reducing errors in the clinical laboratory

LEARNING OBJECTIVES

Upon completion of this article, the reader will be able to:

1. Recall the effects of the laboratory staffing shortage and increased test volume, and possible solutions.

2. Discuss opportunities for improving pre-analytical specimen quality processes.

3. Discuss ways to develop an acceptable QC program for the analytical phase of testing.

4. Discuss the use of post-analytical data to improve laboratory quality practices.

The goal of a clinical laboratory is to deliver medically useful results for patients on a timely basis. This goal can be hindered by the new paradigm of the modern laboratory – “do more with less.” Testing volumes continually increase while staffing levels decrease; in some labs, they are a third of what they were 15 years ago, and yet testing volume has soared. One of the ramifications of the drive to do more with less is a greater reliance on automation in most aspects of the clinical laboratory, from pre-analytical receipt and preparation of patient specimens to auto-verification and delivery of the results.

As our reliance on automation grows and human inspection continues to play a lesser role in the production of clinical diagnostic results, the need to put in place systems and monitors to ensure the quality of the specimen results grows. Most clinical laboratories have a quality management program in place, as it is part of good laboratory practice and is usually a regulatory or accreditation requirement. As part of the quality program, laboratories look for continuous improvement opportunities. This article presents suggestions for improving laboratory quality assurance and quality control practices in the pre- and intra-analytical phases of testing, along with ideas for using post-analytical data to improve laboratory quality practices.

Opportunities for improving pre-analytical quality

The pre-analytical phase is the most problematic laboratory testing process, representing up to 70 percent of laboratory testing errors.1 Regardless of a laboratory’s exact error rate, since the pre-analytical phase represents the most error-prone phase, the total testing process would be greatly enhanced with a reduction in these errors. However, many of the steps in the pre-analytical process take place outside of the control of the laboratory itself, which has made reducing errors particularly challenging.

Test ordering and sample transport are processes that are often performed by non-laboratory personnel, and while in-lab sample collection may be performed by trained medical technologists, many samples come to the lab that were drawn by external staff. Internal processes like sample preparation for testing or transport within the laboratory are subject to error; however, focusing on processes outside the laboratory is important, as they have six times the error frequency of those within.2 Many of the errors external to the lab cause an increase in hemolysis, which represents up to 70 percent of all unsuitable specimens.3

Hemolysis can affect sample testing through the release of intracellular components, proteolysis, and other mechanisms that interfere with instrumentation processes. Poor sampling techniques are the largest contributor to hemolysis and are often the focus of laboratory efforts to reduce hemolysis rates. An often overlooked and uninvestigated source of hemolysis is sample transport: transportation from the draw site to the lab, and ultimately to the testing instrument. Technological advancements now enable both the identification of potential causes of hemolysis and automated detection. This allows laboratories to reduce the number of hemolyzed samples coming in, while ensuring that those that do arrive are detected before erroneous results are reported out.

Transportation is a major cause of in vitro hemolysis.1 Clinical Laboratory Improvement Amendments (CLIA) regulations require that laboratories monitor, evaluate and revise the criteria established for specimen preservation and transportation, and ISO standards require that laboratories have a documented procedure for monitoring the transportation of samples. This has been difficult, if not impossible, to achieve. Therefore, the focus has been on validating the transportation process and, unless large anomalies occur, the laboratory often assumes that the system continues to function properly. Fortunately, technology is now catching up to requirements, and with it comes the opportunity to monitor transportation systems on a regular basis and decrease the hemolysis that irregularities cause.

Samples from external draw sites typically arrive by one of two routes: logistics companies or local couriers. If incoming samples have high hemolysis rates, the laboratory could use monitoring systems offered by the carrier, such as GPS tracking, temperature monitors and vibration sensors. Monitoring shipments at regular intervals could identify conditions that contribute to hemolysis so that adjustments can be made to remediate the issue. For local shipments, tracking courier deliveries is also viable. With the continued miniaturization of electronics, numerous sensors, such as temperature, vibration and GPS, can fit into courier packages or be attached to test tube racks. As a simpler option, products are becoming commercially available in which a small device travels with the patient samples, collecting data along the way from sample pickup through to the diagnostic instrument.

Trackers that mimic sample collection tubes are also useful for monitoring pneumatic tube systems (PTS). These systems have been shown to contribute to hemolysis. Regular monitoring of the PTS with a tracker can identify changes in speed and acceleration caused by changes in vacuum pressure or in routes and branches. These trackers also record data that can be helpful in monitoring automated centrifuges and track system vibration, providing the laboratory with additional information about the robustness of their system in protecting sample integrity. An additional way to help mitigate PTS-induced hemolysis is to set up protocols, such as hand delivery, for samples that are hemolysis prone (e.g. those from cancer patients undergoing radiation therapy).

Even with improved monitoring and a reduction in samples stressed by transportation, samples with hemolysis (H) and other interferences, such as icterus (I) and lipemia (L), will still occur. Their potential impact on analytes such as potassium, sodium, chloride and bilirubin has been well documented. Therefore, being able to accurately identify and index how compromised samples are for HIL is key to assessing the viability and accuracy of certain analytes. Most chemistry analyzers now include an on-board HIL module, which greatly simplifies the process and provides a significantly more accurate and repeatable indexing score. Besides chemistry analytes, some analytes measured by non-isotopic immunoassays (e.g. troponin and PSA) have been shown to be sensitive to hemolysis, so validating these sensitive immunoassays for accuracy at different hemolysis levels and monitoring HIL levels for potentially impacted analytes is strongly recommended.

Automated indexing has greatly improved the consistency and accuracy of the indexing, but these systems are subject to drift.3 Optical elements are subject to interference from dust and changes in lighting, and electronic components may wear, causing indexing values to shift, which can lead to spurious clinical values.

While functions that are part of the analytical phase are regularly tested via quality controls, the same has not been the case for these pre-analytical HIL modules. Awareness of this shortcoming is increasing, and there are now commercial products that act to verify proper functioning of the HIL indexing with automated systems. While there are no explicit regulations around this practice, a European Federation for Clinical Chemistry and Laboratory Medicine (EFLM) working group authored a paper on the topic and recommended daily variation monitoring, in alignment with analytical quality control.4

Rethinking analytical quality control

The most common method of monitoring analytical phase performance is through the use of quality control (QC) material. Inadequate design of QC procedures can lead to erroneous patient test results being released without a QC flag, or to acceptable QC results being flagged. Reducing these errors can be achieved through a risk-based approach.

Evaluating QC material assesses the performance of test method operation for continued testing of patient specimens. Many laboratories still use a single QC rule, where a QC specimen is accepted if it is within two standard deviations (SD) of the QC mean. While this practice has the advantage of being simple, it has some fundamental issues that lead to errors.
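As a rough illustration of the single two-SD rule described above, the sketch below accepts or rejects a QC result and estimates how often an in-control method would be rejected by chance. It assumes Gaussian, independent QC results; the function names are illustrative and not taken from any particular QC software.

```python
from statistics import NormalDist


def within_2sd(qc_value, qc_mean, qc_sd):
    """Accept the QC result if it lies within 2 SD of the QC mean."""
    return abs(qc_value - qc_mean) <= 2 * qc_sd


def false_rejection_probability(n_qc_per_event, limit_sd=2.0):
    """Chance that at least one of n in-control QC results falls outside
    +/- limit_sd (assumption: Gaussian, independent QC results)."""
    std_normal = NormalDist()
    p_inside = std_normal.cdf(limit_sd) - std_normal.cdf(-limit_sd)
    return 1 - p_inside ** n_qc_per_event


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, "QC per event:", round(false_rejection_probability(n), 3))
```

Under these assumptions, roughly 5 percent of QC events with one control, 9 percent with two, and 13 percent with three would be rejected even though the method is performing as expected.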

In general, different analytes have different quality requirements. An analyte like iron has high biological variation and therefore its acceptable quality specification (the amount of variation acceptable without affecting patient care) is larger; for iron it might be 20 percent. An analyte like sodium has a very small biological variation, with a corresponding acceptable quality specification of <3 percent. If the same QC procedure is used for iron and sodium, then either sodium is being under-controlled, increasing the likelihood of releasing erroneous results, or iron is being over-controlled, risking too many false QC rejections, which leads to additional labor and material costs to investigate. Neither situation is optimal. Furthermore, the risk of patient harm from erroneous results is not the same across analytes: the clinical implications of an erroneous albumin result are less grave than the clinical implications of an erroneous troponin result.

A risk-managed approach to designing QC strategies allows the laboratory to develop a QC strategy (the QC rule, number of QCs per QC event and the number of patient specimens between QC events) that keeps the risk of producing erroneous results at an acceptable level. There are usually many different QC strategies that can satisfy a given risk requirement, which means that a laboratory can choose the strategy that is optimized for either using the least amount of QC material possible, or reducing the false rejection rate at the expense of increased QC consumption, but with lower costs associated with investigating QC rejections.

Designing a risk managed QC strategy is straightforward:

- Determine an acceptable risk of producing erroneous results

- Find QC strategies that have a lower risk of producing erroneous results than the acceptable risk

- From those QC strategies, select the one that has the lowest QC utilization rate with an acceptable false rejection rate.
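The three steps above can be sketched as a simple filter-and-select routine. This is a minimal sketch: the `QCStrategy` fields and any numbers fed to it are hypothetical, and the predicted risk for each candidate is assumed to have been computed elsewhere.

```python
from dataclasses import dataclass


@dataclass
class QCStrategy:
    name: str
    predicted_risk: float        # predicted probability of producing erroneous results
    qc_per_day: float            # QC utilization (QC specimens run per day)
    false_rejection_rate: float  # probability of a false rejection per QC event


def select_strategy(candidates, acceptable_risk, max_false_rejection_rate):
    """Keep strategies whose predicted risk does not exceed the acceptable risk
    and whose false rejection rate is tolerable, then pick the one that uses
    the least QC. Returns None if no candidate qualifies."""
    eligible = [
        s for s in candidates
        if s.predicted_risk <= acceptable_risk
        and s.false_rejection_rate <= max_false_rejection_rate
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda s: s.qc_per_day)
```

A laboratory that cares more about avoiding false rejections than about QC consumption could swap the final `min` key to `false_rejection_rate` instead.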

Determining acceptable risk of patient harm from erroneous results for an analyte

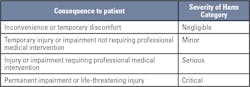

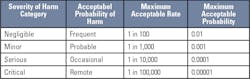

Two documents have guidance on determining acceptable risk of patient harm from erroneous results: CLSI EP-23 Laboratory Quality Control Based on Risk Management5 and ISO 14971 - Application of Risk Management to Medical Devices.6 These standards give examples of severity of harm categories to determine acceptable risk. There are five severity of harm categories, each with a corresponding consequence to a patient.

Initially, it is best to make the simplifying assumption that every erroneous result causes patient harm in order to provide the maximum acceptable probability for producing erroneous results for each severity of harm category.

Predicting the probability of producing erroneous results for a QC strategy

Erroneous results can be produced in two circumstances – when the test method is “in-control” and when the test method is “out-of-control.” The probability of producing erroneous results for a test method is the combination of the probability of producing erroneous results when the test method is “in-control” and the probability of producing erroneous results when the test method is “out-of-control.”

Computing the “in-control” probability of producing erroneous results requires a quality specification, the means and standard deviations of the QC materials, and the corresponding reference targets. Computing an “out-of-control” probability of producing erroneous results additionally requires three pieces of information: the current QC strategy (number of QCs per QC event, QC rule and number of patients between QC events), the number of patient specimens per day, and the reliability of the test, which is the mean time between “out-of-control” events.
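For the “in-control” case, one common simplification is to treat measurement error as Gaussian, with bias taken as the QC mean minus the reference target, and to count a result as erroneous when its error exceeds the quality specification. The sketch below follows that simplification for a single QC level; it is an assumption for illustration, not necessarily the method used in the cited publications, and the parameter names are hypothetical.

```python
from statistics import NormalDist


def in_control_error_probability(qc_mean, qc_sd, reference_target, allowable_error_pct):
    """Probability that a result deviates from the reference target by more than
    the quality specification while the method is in control.

    Assumptions: Gaussian measurement error; the quality specification is
    expressed as a percentage of the reference target."""
    bias = qc_mean - reference_target
    limit = reference_target * allowable_error_pct / 100
    error = NormalDist(mu=bias, sigma=qc_sd)
    p_acceptable = error.cdf(limit) - error.cdf(-limit)
    return 1 - p_acceptable


if __name__ == "__main__":
    # Hypothetical sodium control: target 140 mmol/L, observed mean 140.5, SD 1.0,
    # quality specification 3 percent.
    print(in_control_error_probability(140.5, 1.0, 140.0, 3.0))
```

The “out-of-control” probability needs the additional inputs listed above and is not attempted here.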

Detailed guidance for estimating these probabilities for a given QC strategy on a test method can be found in publications by Curtis Parvin, PhD.7,8

Selecting an appropriate risk-managed QC strategy

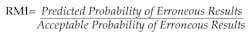

Before determining if a new QC strategy is required, the laboratory should determine if the current risk level is acceptable. A Risk Management Index (RMI) for a QC Strategy can be computed by dividing the predicted probability of producing erroneous results for a QC strategy by the acceptable probability of erroneous results for an analyte.

QC strategies that have an RMI of 1 or less have managed risk; QC strategies with an RMI > 1 do not. If the Risk Management Index is greater than 1, a new QC strategy should be selected and the RMI recalculated.
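The RMI calculation and its decision threshold are simple enough to express directly. In this short sketch the function names and the example probabilities are illustrative only.

```python
def risk_management_index(predicted_probability, acceptable_probability):
    """RMI = predicted probability of erroneous results / acceptable probability."""
    if acceptable_probability <= 0:
        raise ValueError("acceptable probability must be positive")
    return predicted_probability / acceptable_probability


def risk_is_managed(rmi):
    """An RMI of 1 or less means the QC strategy has managed risk."""
    return rmi <= 1


if __name__ == "__main__":
    # Hypothetical values: predicted 0.0005 against an acceptable 0.001.
    rmi = risk_management_index(0.0005, 0.001)
    print(rmi, risk_is_managed(rmi))
```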

Optimize the QC strategy for efficiency and effectiveness

A laboratory’s QC strategy should provide adequate control (effectiveness) at an acceptable price (efficiency). The only way to eliminate risk is to run a QC between every patient sample, an impractical option. Occasionally, the strategy that drives risk to the lowest possible level requires more QC than is practical or affordable. The laboratory must then weigh the options. Every QC strategy has an expected time between false rejections, E(Tfr). For risk-managed QC strategies, there is a correlation between QC utilization and expected time between false rejections.

QC strategies that use the least amount of QC material generally have the least amount of time between false rejections (lowest E(Tfr)), while the QC strategies with the longest time between false rejections (highest E(Tfr)) use the most QC. This is in contrast to more traditional QC design, where performing more QC can increase the frequency of false rejections. Looking at expected time between false rejections allows the laboratory to find a risk-managed QC strategy that has an acceptable expected time between false rejections at an acceptable cost. These computations can be performed manually or through commercial software tools.
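One simple way to estimate E(Tfr) is sketched below, assuming that every QC event has the same, independent probability of a false rejection. Under that assumption the expected number of QC events until a false rejection is the reciprocal of the probability, which is then converted to days. The strategy figures in the example are hypothetical.

```python
def expected_days_between_false_rejections(p_false_rejection_per_event, qc_events_per_day):
    """E(Tfr) in days, assuming independent QC events with a constant
    false rejection probability (geometric waiting time)."""
    if not 0 < p_false_rejection_per_event <= 1:
        raise ValueError("probability must be in (0, 1]")
    if qc_events_per_day <= 0:
        raise ValueError("QC events per day must be positive")
    return 1 / (p_false_rejection_per_event * qc_events_per_day)


if __name__ == "__main__":
    # Hypothetical strategy A: tight rule, one QC event per day, about 9% false rejections.
    print(expected_days_between_false_rejections(0.089, 1))
    # Hypothetical strategy B: wider rule, three QC events per day, about 0.5% false rejections.
    print(expected_days_between_false_rejections(0.0054, 3))
```

In this illustration the strategy that runs QC more often, but with a wider rule, goes much longer between false rejections. Whether both strategies actually meet the laboratory's risk requirement has to come from the risk calculation itself.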

Using post-analytical analysis for improving QA

Post-analytical analysis involves using reported patient and QC results in an ongoing effort to improve laboratory quality. Laboratories routinely obtain performance data in real time, but other analyses are available that allow a lab to evaluate whether systems are stable or subtle shifts are occurring but have yet to cause a failure. Two such indicators are analyte positivity rates and interlaboratory peer group QC programs. Monitoring positive rates can identify changes in test method performance, while an interlaboratory peer group program allows the laboratory to assess bias and monitor global and relative performance of test methods.

Positive rates

Some clinical analytes are directly associated with clinical conditions - like HbA1c with diabetes, troponin with myocardial infarction and tumor markers with the presence of oncological disorders. For such analytes, monitoring the rate of positives is a tool for discovering unexpected changes in test performance. If the patient population remains stable, the positive rates over time should remain relatively stable. If the rate of positives starts to drift or suddenly jumps, it could be an indication of a problem in the testing process.

Testing volume drives the length of the period over which positive rates should be computed. In general, 20 specimens are the smallest number that should be used for computing a positive rate. If there is sufficient data, computing positive rates on a weekly or bi-weekly basis is generally preferable to a rolling seven-day or 14-day computation, as it will incorporate day of the week fluctuations in the patient demographics. If there are no day of the week fluctuations in patient demographics, then rolling statistics are preferable.
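A weekly positive-rate calculation that respects the 20-specimen minimum might look like the sketch below. The input format (a collection date plus a positive or negative flag) and the use of ISO calendar weeks are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date

MIN_SPECIMENS = 20  # smallest count for which a positive rate is reported


def weekly_positive_rates(results):
    """results: iterable of (collection_date, is_positive) pairs.

    Returns a dict keyed by (ISO year, ISO week). The value is the positive
    rate for that week, or None when fewer than MIN_SPECIMENS were tested."""
    counts = defaultdict(lambda: [0, 0])  # key -> [total, positives]
    for day, is_positive in results:
        iso = day.isocalendar()
        key = (iso[0], iso[1])
        counts[key][0] += 1
        counts[key][1] += int(bool(is_positive))
    return {
        key: (positives / total if total >= MIN_SPECIMENS else None)
        for key, (total, positives) in sorted(counts.items())
    }
```

A sustained shift in these weekly values, with no known change in the patient population, would be the signal to investigate the test method.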

For example, if the percentage of HbA1c results indicating diabetic patients jumps by 30 percent in one week and remains high, either something has happened to the patient population (perhaps a new diabetic clinic is sending its patients to the laboratory, or there has been some other change in the demographics of the patients being tested) or a positive bias has been introduced in the HbA1c test method. In the absence of a population change, the possibility of such a bias needs to be investigated.

For molecular testing of infectious diseases, positive rates should be tracked by the site of specimen collection if possible. Qualitative molecular testing is less likely to have a problematic bias than quantitative assays but can easily be subject to contamination. Significant changes in the positive rate for specimens collected at a specific site may indicate contamination issues that will persist until the contaminated collection site is identified and decontaminated.

Interlaboratory peer-group QC programs

Post-analytical analysis on QC data provides tremendous value to the laboratory for assessing and monitoring test method performance statistics, but to leverage the full value of QC testing, interlaboratory peer-group QC program participation is required. A laboratory can easily evaluate and monitor test method precision with their own QC data, but it is very difficult to assess bias without peer-group comparison.

Not only does statistical comparison with a peer group allow the laboratory to assess their bias with respect to the peer group, it also gives the laboratory a view of how the test method is performing in other laboratories. The peer-group comparison can compare the laboratory to others using the same test method on the same instrument type, or to other labs using the same general test-method, or to all labs testing the same analyte on the same lot of controls. This gives the laboratory an excellent view of how commutable the results are for the test method in use (assuming that the QC performance is indicative of patient specimen results).

Peer-group comparison also allows the laboratory to assess its performance in the context of its peers. If the laboratory is trying to improve its test method precision and its CV is already lower than that of 95 percent of the peers, it is unlikely to be successful. If 95 percent of the peers have better precision with the same test method, the laboratory should investigate why and take steps to improve performance.

Assessing the bias and precision of their test method in the context of the performance of peer laboratories makes it easy to identify opportunities for improvement. Peer-group programs also offer the added ability to compare performance between test methods and are an excellent resource to consult when evaluating whether a test method is adequate when compared to other methods.
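The two comparisons discussed here, bias relative to the peer group and where the laboratory's CV sits among its peers, can be sketched as follows. The peer data are assumed to be per-laboratory means and CVs for the same control lot, and the function names are illustrative rather than taken from any peer-group program.

```python
from statistics import mean


def peer_bias_percent(lab_mean, peer_means):
    """Laboratory bias relative to the peer-group mean, in percent."""
    peer_mean = mean(peer_means)
    return 100 * (lab_mean - peer_mean) / peer_mean


def fraction_of_peers_with_better_precision(lab_cv, peer_cvs):
    """Share of peer laboratories whose CV is lower than the laboratory's CV."""
    return sum(cv < lab_cv for cv in peer_cvs) / len(peer_cvs)


if __name__ == "__main__":
    # Hypothetical peer data for one control level.
    peer_means = [99.0, 100.0, 101.0, 100.5, 99.5]
    peer_cvs = [1.8, 2.0, 2.4, 2.1, 1.9]
    print(peer_bias_percent(102.0, peer_means))
    print(fraction_of_peers_with_better_precision(2.2, peer_cvs))
```

A fraction near 1 suggests most peers achieve better precision with the same method and that an investigation is warranted. A fraction near 0 suggests there is little room left to improve.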

On a regular basis, performance of each test method in the laboratory should be evaluated in the context of the performance of the peer group and opportunities for improvement identified, particularly when the majority of the peers are achieving better bias or precision.

Conclusion

Several practices are presented that will help clinical laboratories reduce errors, improve quality and reduce costs:

- Pre-analytical phase

○ Routinely tracking and evaluating each part of the transportation chain, external and internal, will help drive down hemolysis rates. Specimen Transport Studies with data loggers can identify systems and routes that need improvement to reduce compromised samples.

○ Using an HIL indexing control at regular intervals ensures that electronic specimen integrity checks are working and identifies when these systems are operating outside of specifications.

- Intra-analytical phase

○ Implementing a risk-managed QC strategy ensures that the QC system is optimized for the laboratory’s testing needs and that the lab is not over-controlling or under-controlling. It also allows the laboratory to make management decisions about the false rejection rate of their QC procedures and improve it with additional QC evaluations if desired.

- Post-analytical phase

○ Monitoring an analyte’s positive rates allows the laboratory to identify changes in patient results that may indicate a test method problem if they are not related to changes in the patient population.

○ Participating in an interlaboratory peer-group QC program allows the laboratory to identify bias problems and opportunities for test method improvement.

References

1. Lippi G, von Meyer A, Cadamuro J, Simundic AM. Blood sample quality. Diagnosis (Berl). 2019;6(1):25-31.

2. Plebani M. Exploring the iceberg of errors in laboratory medicine. Clin Chim Acta. 2009;404(1):16-23.

3. Green SF. The cost of poor blood specimen quality and errors in preanalytical processes. Clin Biochem. 2013;46(13-14):1175-1179.

4. Simundic AM, Baird G, Cadamuro J, Costelloe SJ, Lippi G. Managing hemolyzed samples in clinical laboratories. Crit Rev Clin Lab Sci. 2020;57(1):1-21.

5. Clinical and Laboratory Standards Institute. Laboratory Quality Control Based on Risk Management; Approved Guideline. 1st ed. CLSI Guideline EP23-A. Wayne, PA: Clinical and Laboratory Standards Institute; 2011.

6. International Organization for Standardization. Medical Devices: Application of Risk Management to Medical Devices. ISO 14971. Geneva, Switzerland: International Organization for Standardization; 2007.

7. Yundt-Pacheco JC, Parvin CA. Computing a risk management index: correlating a quality control strategy to patient risk. Clin Chem. 2017;63:s227-s228.

8. Parvin CA, Baumann NA. Assessing quality control strategies for HbA1c measurements from a patient risk perspective. J Diabetes Sci Technol. 2018;12(4):786-791.

About the Author

John Yundt-Pacheco, MSCS, is the Senior Principal Scientist at the Quality Systems Division of Bio-Rad Laboratories. He leads the Informatics Discovery Group, doing research in quality control and patient risk issues. He has had the opportunity to work with laboratories around the world, developing real time, inter-laboratory quality control systems, proficiency testing systems, risk management and other quality management systems.

Mark Gersh, MBA, is Senior Manager in the Market Development team at Bio-Rad Laboratories.